Previously, we introduced how to use Azure OpenAI to develop a RAG (Retrieval-Augmented Generation) chatbot. In this article, we will learn how to create our own OpenAI endpoints in Azure Cloud.

Azure OpenAI is a service provided by Microsoft Azure that allows businesses and developers to integrate OpenAI’s advanced AI models into their applications and systems. This service gives users access to powerful language models like GPT-3, GPT-4, and other AI models developed by OpenAI, via an API hosted on Microsoft’s Azure cloud platform.

To use Azure OpenAI, you need an Azure subscription with access to the Azure OpenAI service. This article assumes you already have an Azure account and an Azure subscription. Here are the steps to create your own OpenAI endpoints in Azure Cloud:

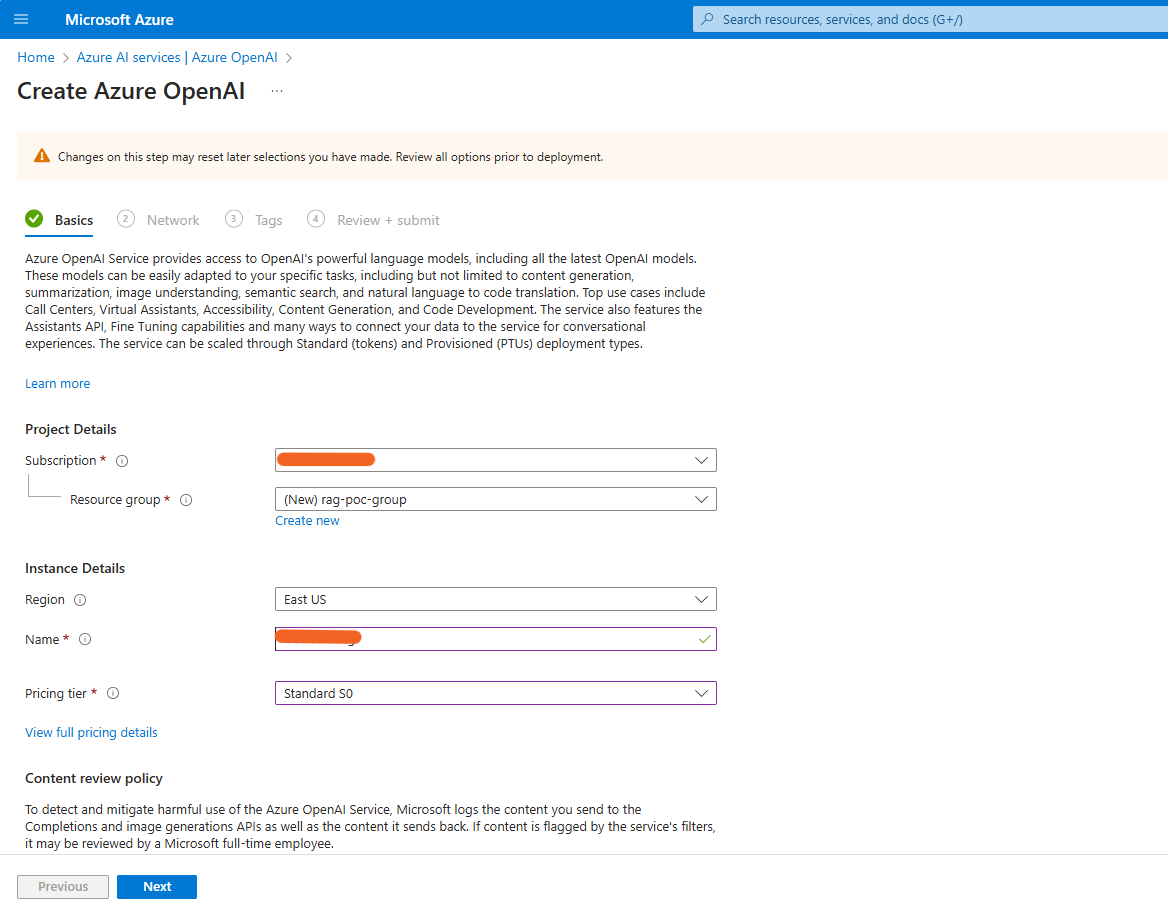

Step 1: Create Azure OpenAI Resource

In the Azure portal, open Azure AI Services and create an Azure OpenAI resource. Select your subscription and resource group, or create a new resource group if you don’t have one. Then fill in the resource name, which becomes part of the endpoint URL, and select the pricing tier.

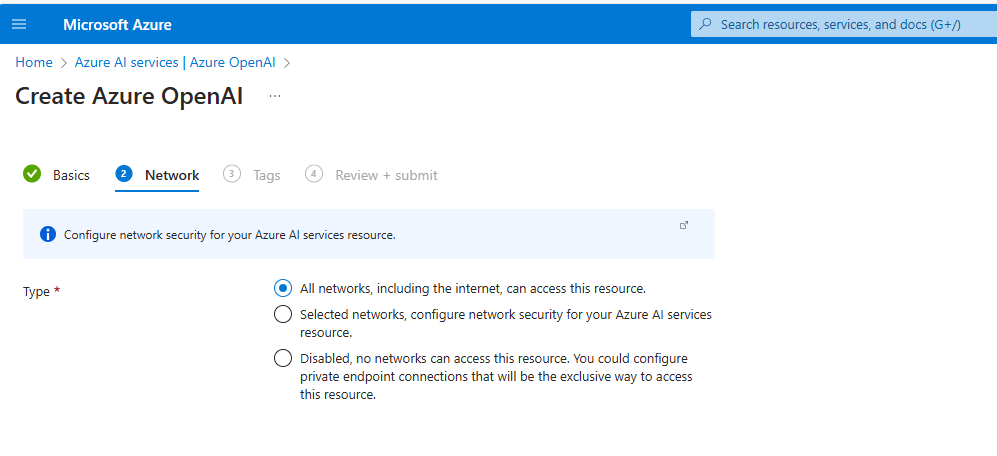

In the Network step, choose the "All networks including internet" option, because we want to access the OpenAI API from anywhere.

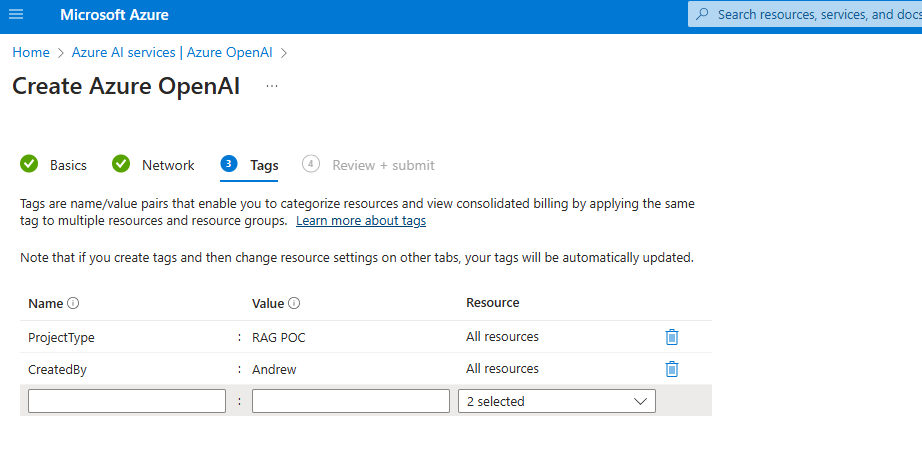

In the Tags step, you can optionally add your own tags to this resource.

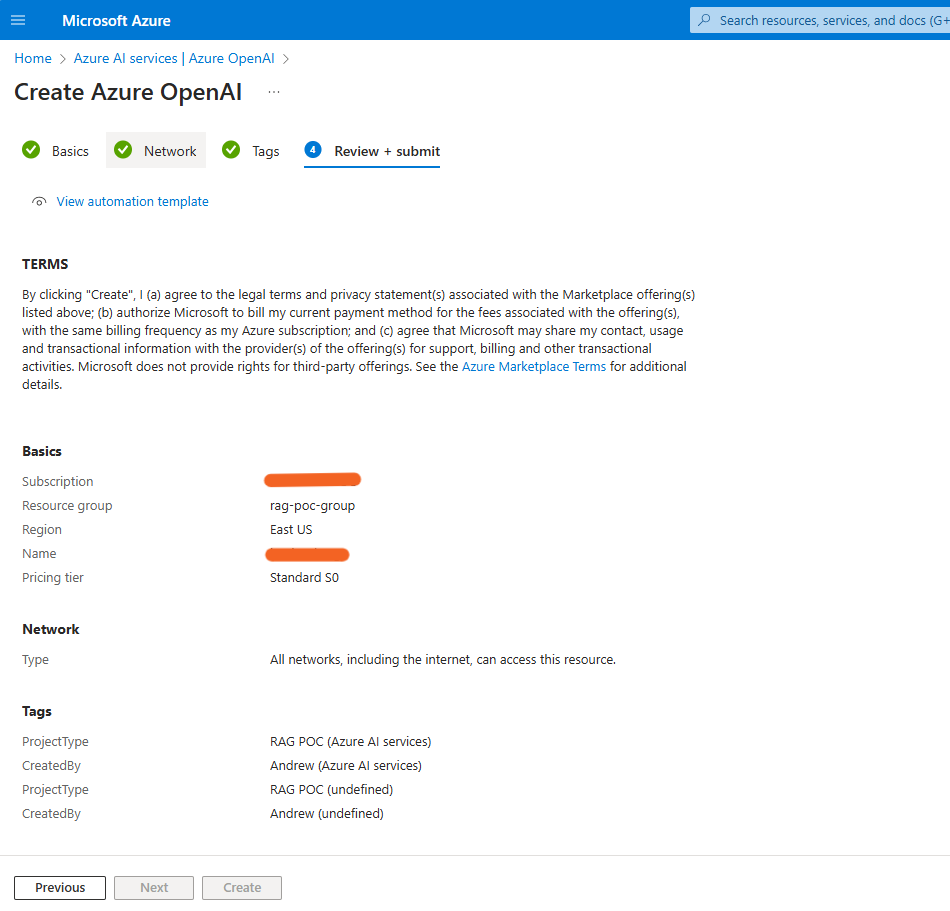

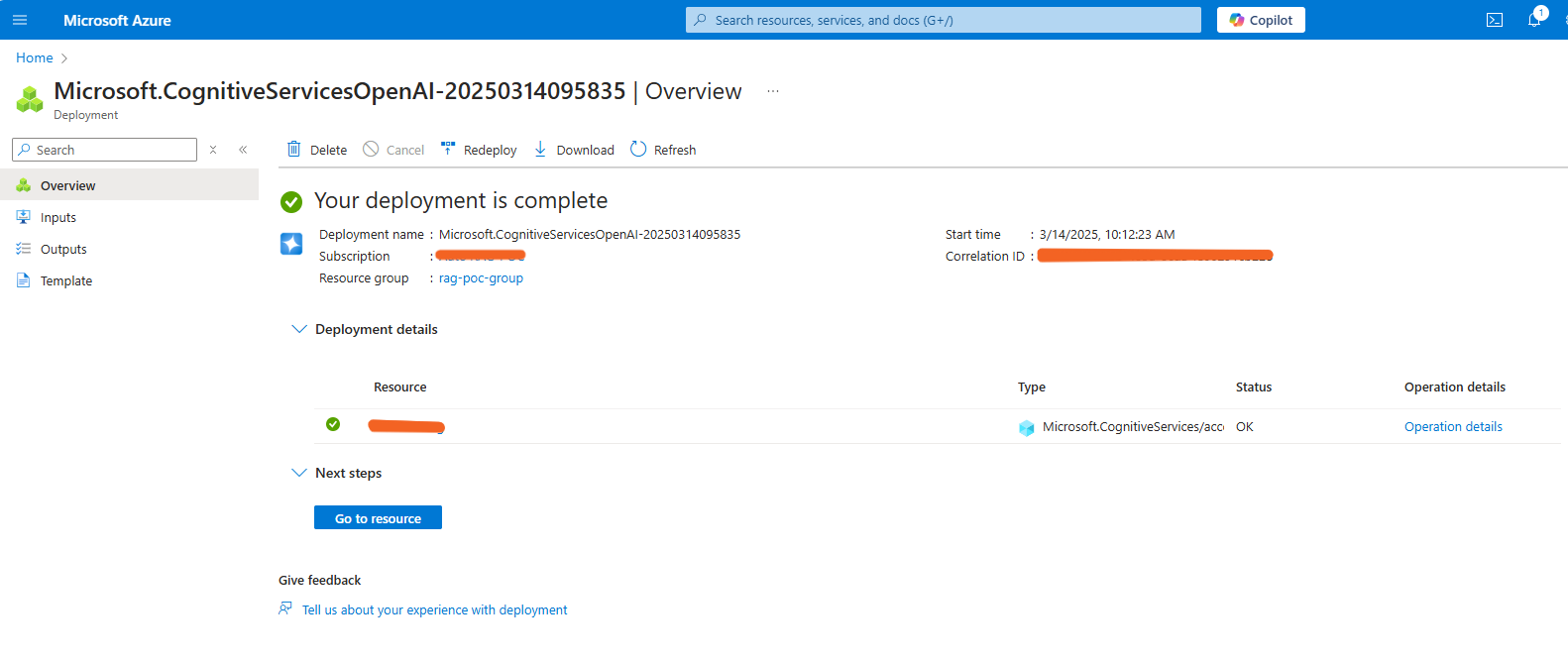

After that, review the settings and create the resource.

Deploying the resource can take a few seconds.

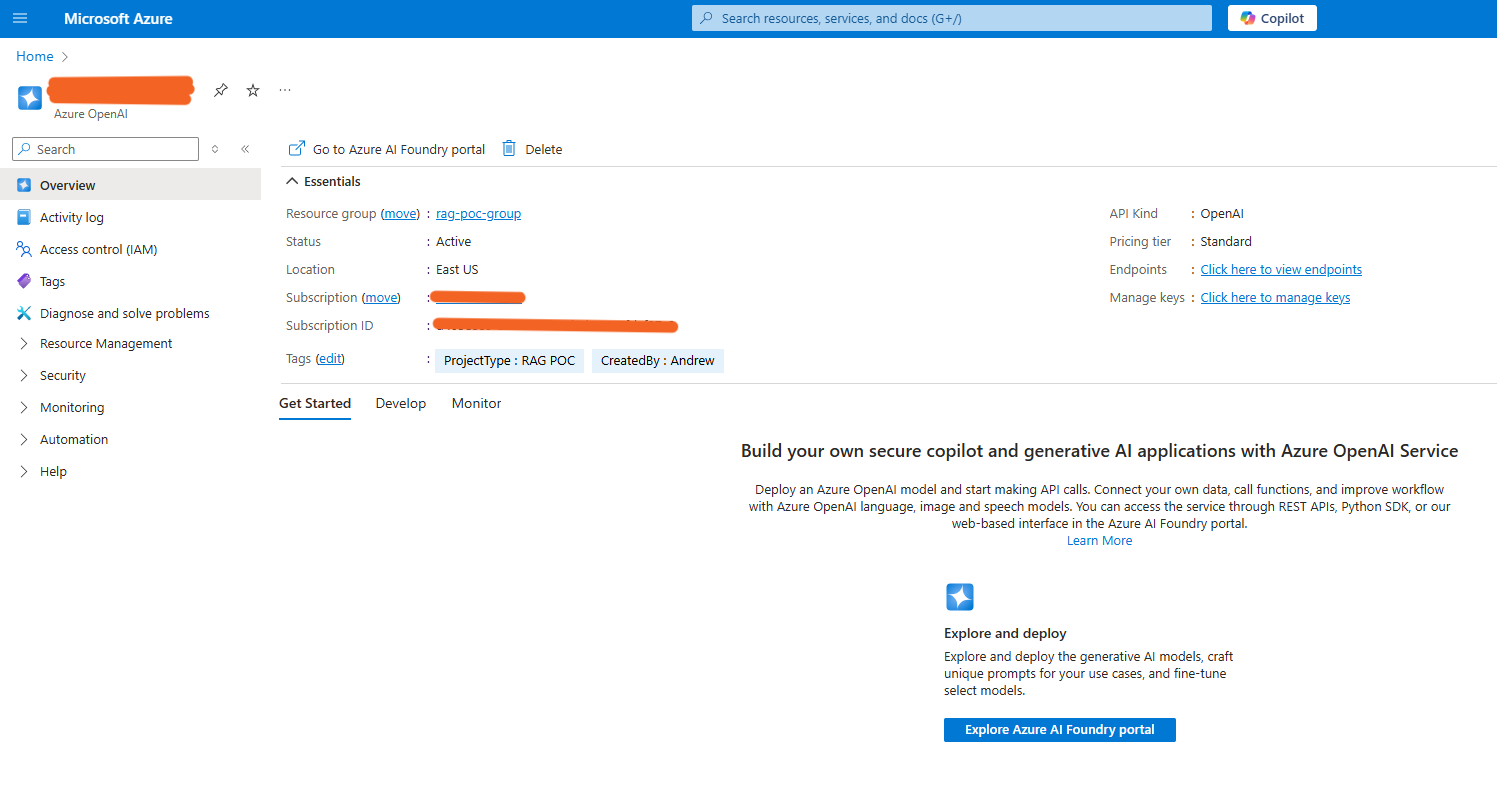

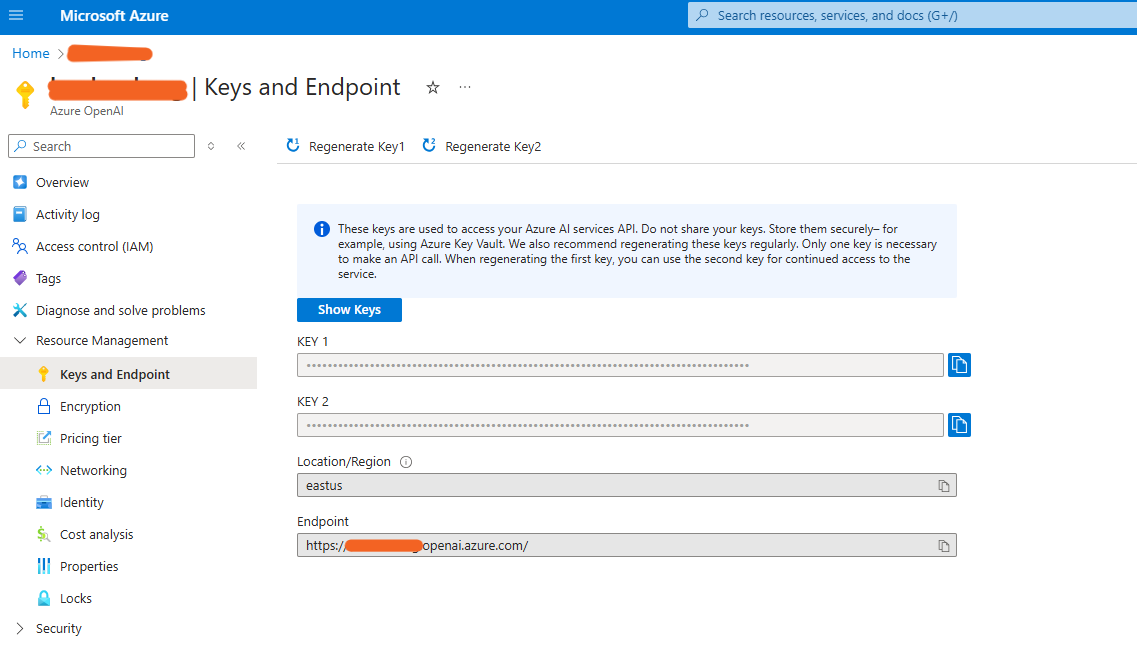

Once the resource is deployed, select it and click the Endpoints and Manage keys links to see the API endpoint and keys.

You can copy the API endpoint and a key and use them in your application to access the OpenAI API.
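A common way to keep the endpoint and key out of your source code is to read them from environment variables. The sketch below assumes the variable names `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY` (the same names the official `openai` Python package's `AzureOpenAI` client looks for by default); `AzureOpenAIConfig` and `load_config` are hypothetical helpers for illustration, not part of any SDK.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AzureOpenAIConfig:
    """Connection settings copied from the resource's Endpoints / Manage keys page."""
    endpoint: str  # e.g. https://<your-resource-name>.openai.azure.com
    api_key: str


def load_config(env: Mapping[str, str] = os.environ) -> AzureOpenAIConfig:
    """Read the endpoint and key from environment variables (hypothetical helper)."""
    endpoint = env.get("AZURE_OPENAI_ENDPOINT", "").strip()
    api_key = env.get("AZURE_OPENAI_API_KEY", "").strip()
    if not endpoint or not api_key:
        raise ValueError("Set AZURE_OPENAI_ENDPOINT and AZURE_OPENAI_API_KEY first")
    if not endpoint.startswith("https://"):
        raise ValueError(f"Endpoint should be an https:// URL, got {endpoint!r}")
    # Drop any trailing slash so request paths can be appended safely later.
    return AzureOpenAIConfig(endpoint=endpoint.rstrip("/"), api_key=api_key)
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to test without touching your real environment.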

Have we finished creating our own OpenAI endpoint in Azure Cloud? Not yet. We still need to deploy an OpenAI model to our Azure OpenAI resource. If we call the API endpoint now, we get an error like the one below:

```
openai.NotFoundError: Error code: 404 - {'error': {'code': 'DeploymentNotFound', 'message': 'The API deployment for this resource does not exist. If you created the deployment within the last 5 minutes, please wait a moment and try again.'}}
```

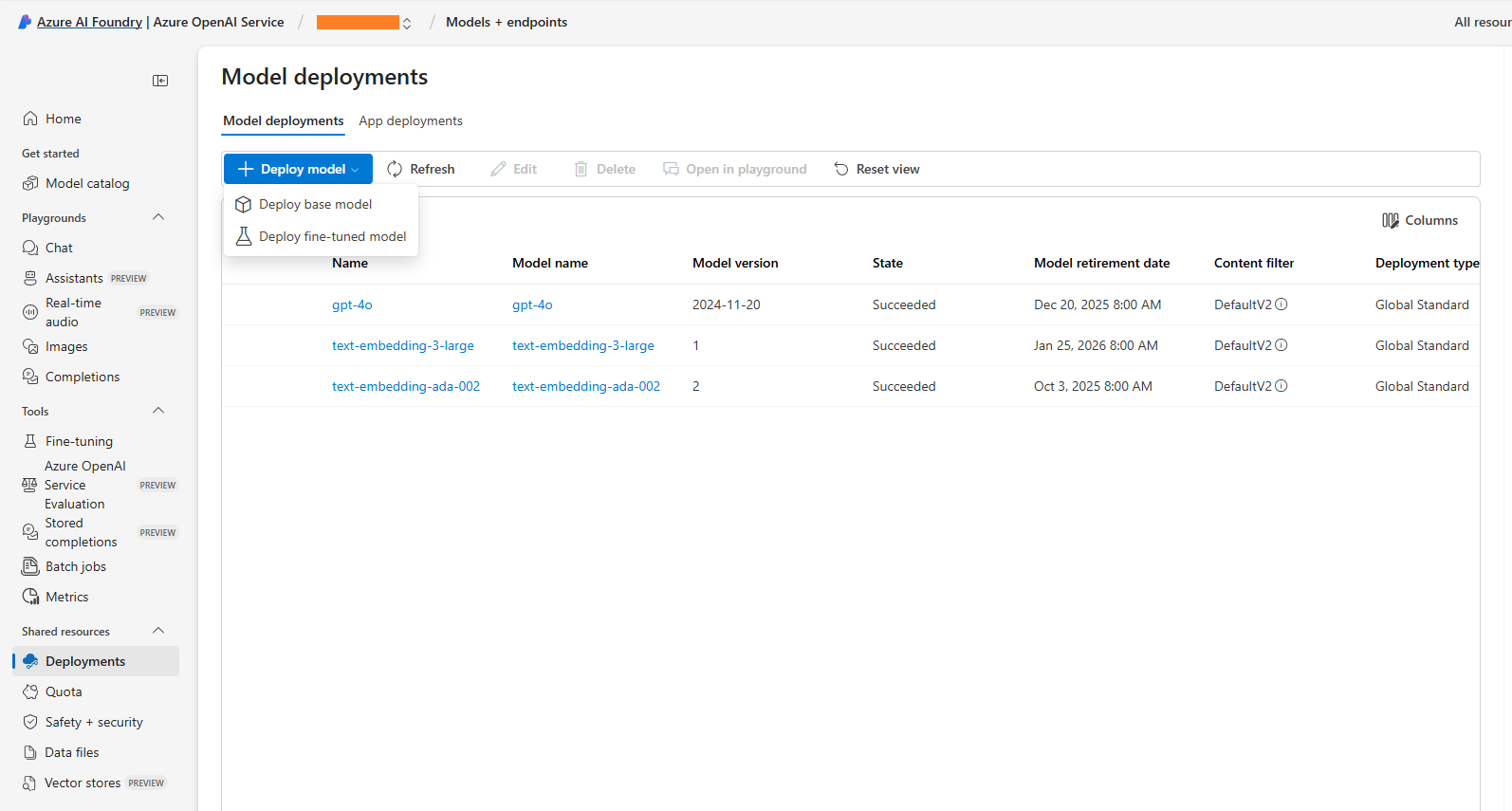
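When calling the REST API directly, the same problem shows up as an HTTP 404 whose JSON body has `error.code` set to `DeploymentNotFound`, as in the message above. Here is a minimal sketch for telling this case apart from other 404s, assuming you already have the status code and the raw response body; `is_deployment_not_found` is a hypothetical helper.

```python
import json


def is_deployment_not_found(status: int, body: str) -> bool:
    """Return True if a response looks like the 'DeploymentNotFound' error above."""
    if status != 404:
        return False
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return False  # not a JSON error body, so it is some other kind of 404
    error = payload.get("error") if isinstance(payload, dict) else None
    return isinstance(error, dict) and error.get("code") == "DeploymentNotFound"
```

If this returns True right after you deploy a model, wait a few minutes as the message suggests; otherwise, check that the deployment name in your request matches the one you created.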

Step 2: Deploy OpenAI Model to Azure OpenAI Resource

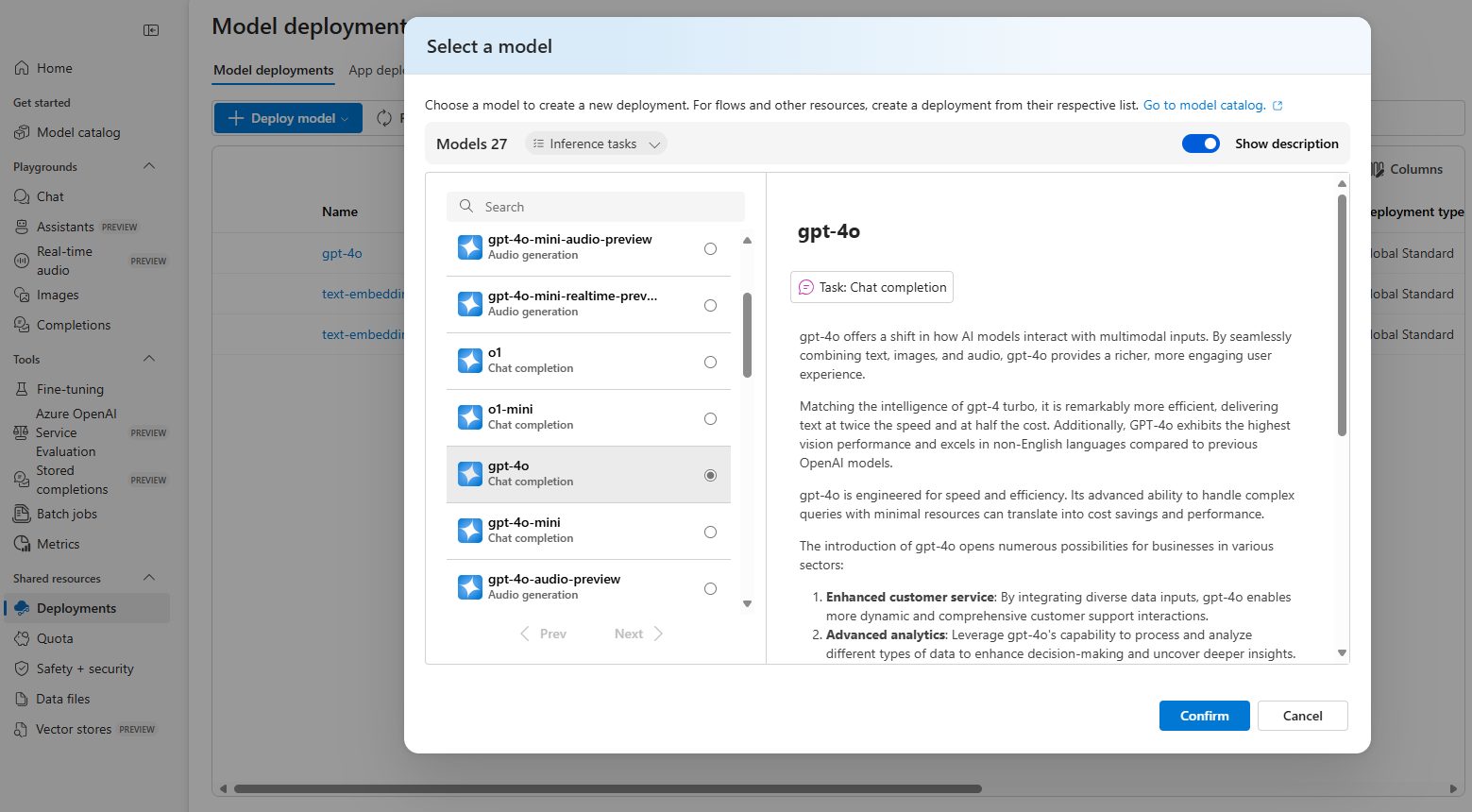

To deploy an OpenAI model to our Azure OpenAI resource, open Azure AI Foundry and select Deployments in the left menu. Click the Deploy model button and select Deploy base model.

Let’s choose the GPT-4o model.

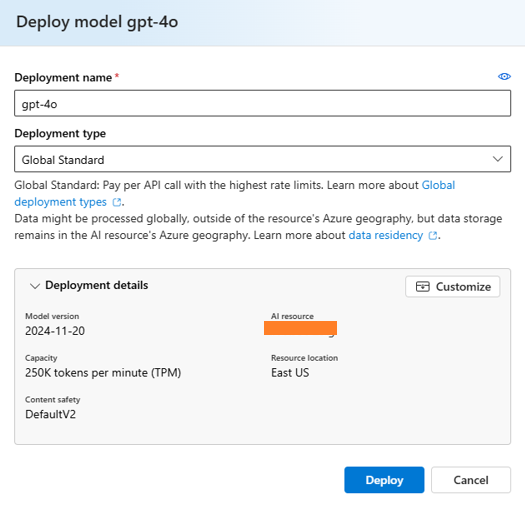

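In the deploy model dialog, let’s keep the default deployment name and select Global Standard as the deployment type. Note the deployment name: requests to the endpoint identify the model by this deployment name, so your application must use it.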

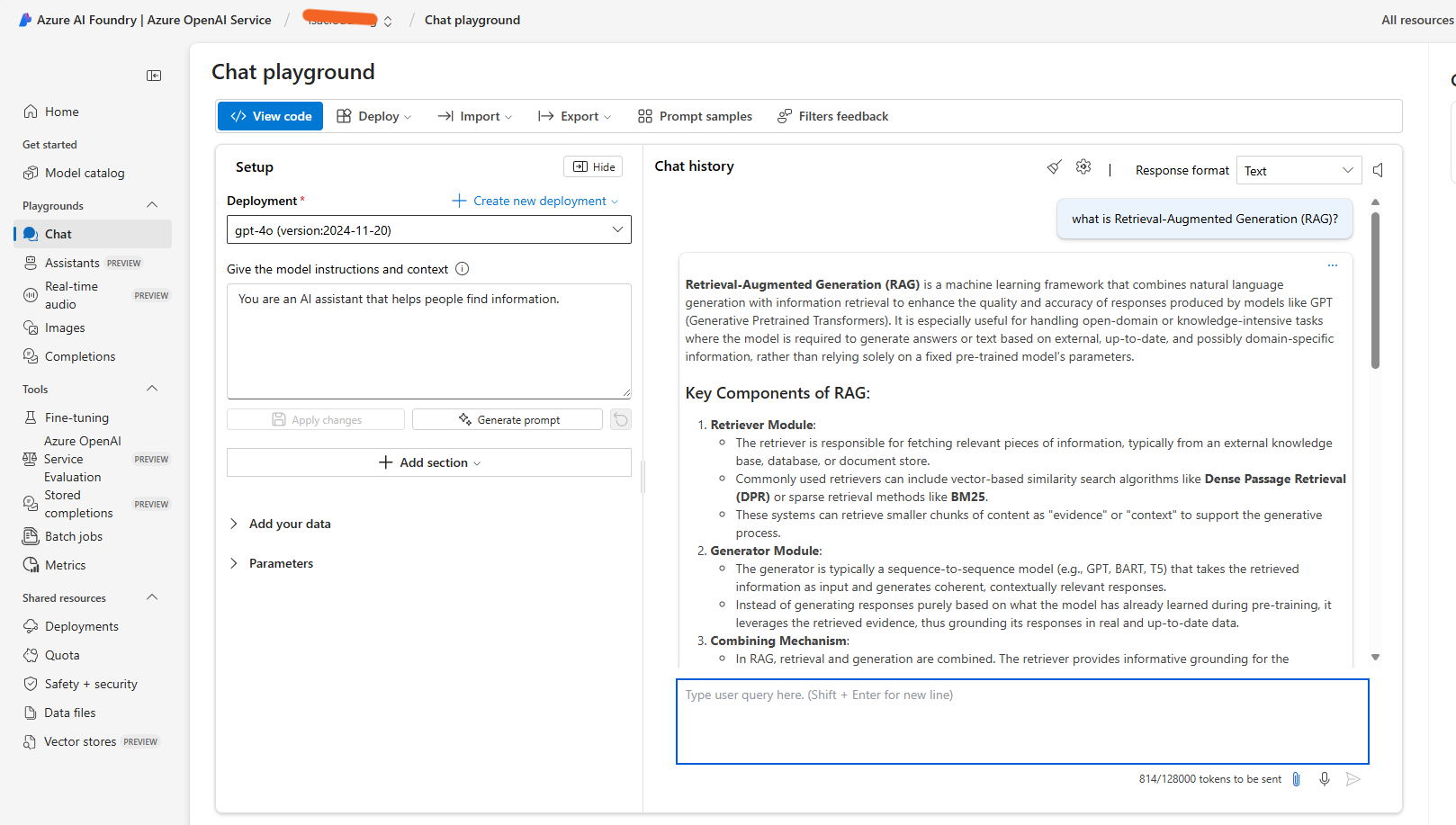

Step 3: Test the OpenAI Endpoint

Once the model deployment is done, we can go to the Playground menu to test our model.

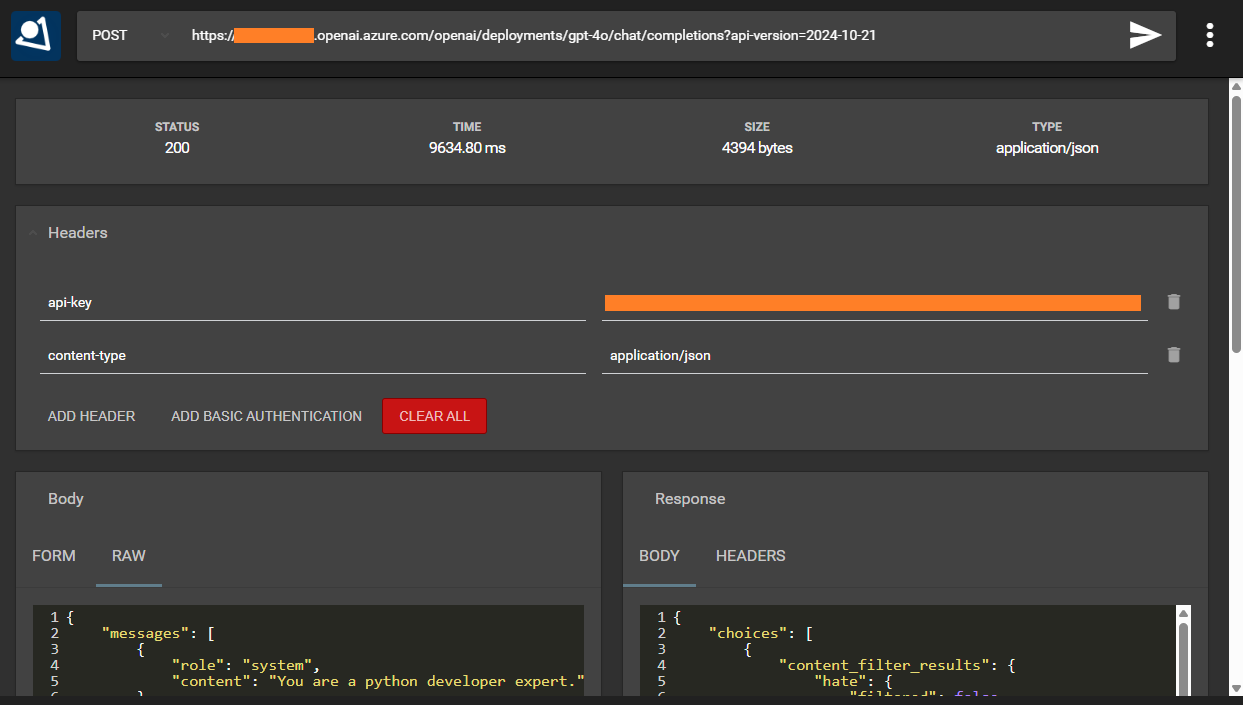

Now you can use your own OpenAI endpoint in your application without the DeploymentNotFound error. The screenshot below uses the RESTMan extension to call the Azure OpenAI endpoint through its REST API.

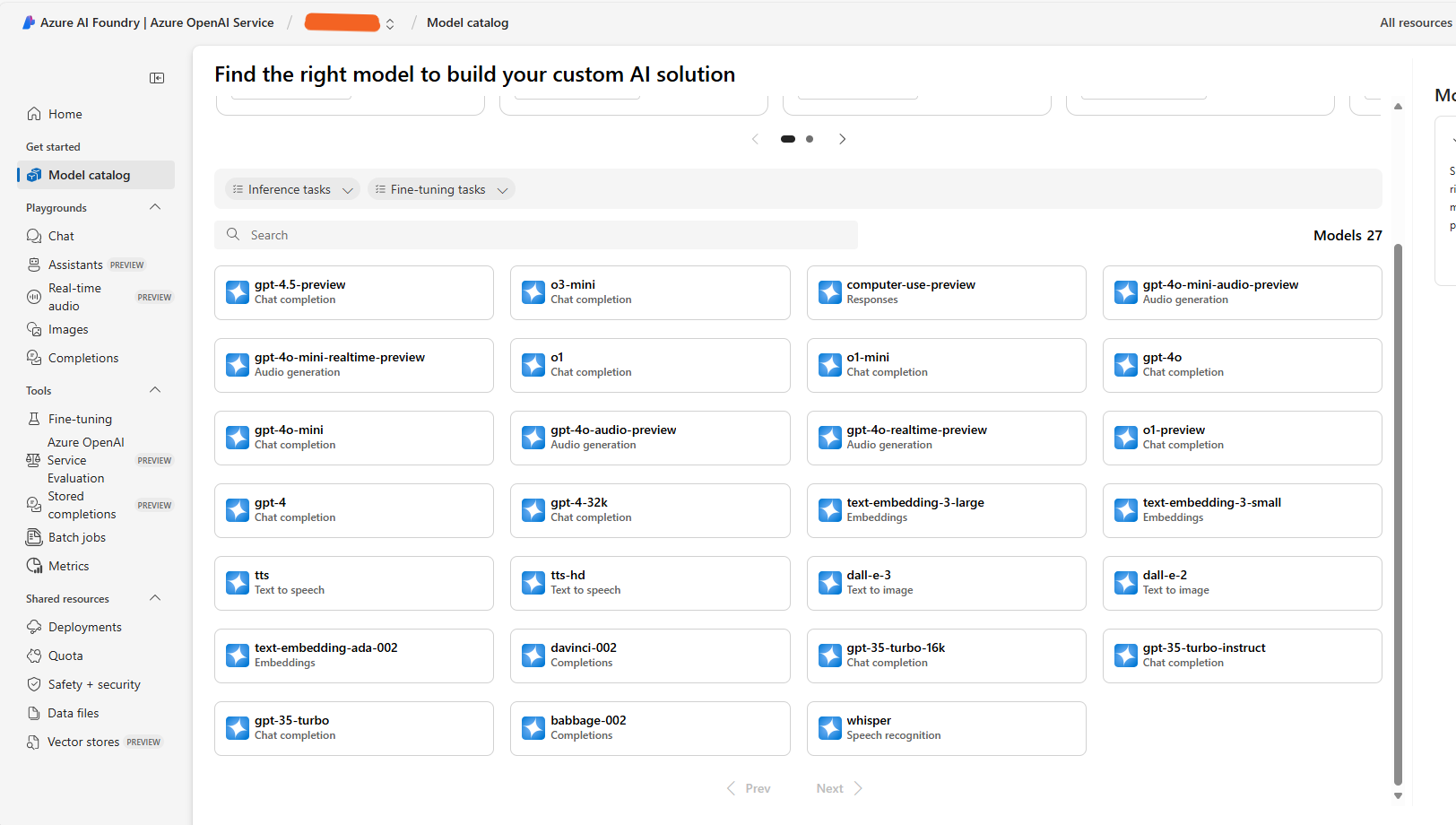
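The same call can be made from code. Below is a minimal sketch using only the Python standard library. It assumes the Azure OpenAI data-plane URL format `{endpoint}/openai/deployments/{deployment-name}/chat/completions?api-version={version}` with the key sent in an `api-key` header. The resource URL, the deployment name `gpt-4o`, and the `api-version` value `2024-10-21` are example assumptions, so check the Azure OpenAI REST reference for the versions your resource supports. `build_chat_request` and `extract_reply` are hypothetical helpers.

```python
import json
import urllib.parse
import urllib.request


def build_chat_request(endpoint: str, api_key: str, deployment: str,
                       prompt: str, api_version: str = "2024-10-21") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for an Azure OpenAI deployment."""
    # Requests are routed by deployment name, not by the underlying model name.
    url = (f"{endpoint.rstrip('/')}/openai/deployments/"
           f"{urllib.parse.quote(deployment)}/chat/completions"
           f"?api-version={urllib.parse.quote(api_version)}")
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def extract_reply(response_body: str) -> str:
    """Pull the assistant's text out of a chat completions JSON response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


# Usage (sending requires a real endpoint, key, and deployment):
# req = build_chat_request("https://<your-resource-name>.openai.azure.com",
#                          "<your-key>", "gpt-4o", "Hello!")
# with urllib.request.urlopen(req) as resp:
#     print(extract_reply(resp.read().decode("utf-8")))
```

Building the request separately from sending it keeps the URL and headers easy to inspect, which helps when debugging errors such as a mistyped deployment name.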

Azure AI Foundry offers many models, so you can choose and deploy whichever one suits your application.

I hope this article helps you create your own OpenAI endpoints in Azure Cloud.