Background: The Challenge of Manual Simulator Management

In our development workflow, we rely heavily on a diagnosis simulator that mimics real device behavior by responding to device data requests through MQTT. This simulator is crucial for testing various scenarios without requiring actual hardware. The simulator operates in two separate environments:

- Stage environment: Used for development and pre-production testing

- Production environment: Used for final testing and validation

Both environments run as Docker containers on remote AWS EC2 instances, providing isolated and consistent testing environments. However, managing these simulators manually has become a significant pain point for our team:

- Manual container management: Starting, stopping, and restarting containers requires SSH access and Docker commands

- Configuration updates: Modifying simulator device data for different test scenarios involves editing JSON files and container restarts

- Team accessibility: Only team members with AWS access and Docker knowledge can manage the simulators

- Time-consuming processes: Each configuration change requires multiple manual steps

To address these challenges, I decided to build a Model Context Protocol (MCP) server that would automate simulator management and make it accessible to all team members through familiar AI interfaces.

What is MCP (Model Context Protocol)?

The Model Context Protocol (MCP) is an open-source standard that enables AI assistants to securely connect to external data sources and tools. Think of it as a bridge between AI models and real-world applications, allowing AI assistants to interact with your systems in a controlled and secure manner.

Key Benefits of MCP:

- Standardized Integration: MCP provides a uniform way for AI models to access external tools and data

- Security: Clients can only use the tools a server explicitly exposes, which keeps interactions controlled and auditable

- Extensibility: Easy to add new tools and capabilities

- Multi-client Support: Works with various AI clients like Claude Desktop, GitHub Copilot, and Goose

- Developer Friendly: Simple SDK for building custom servers

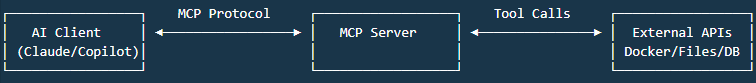

MCP Architecture:

The common architecture involves an AI client (like Claude or Copilot) communicating with an MCP server that exposes specific tools. The MCP server, in turn, interacts with external systems such as Docker containers or databases. The diagram below shows this common architecture:

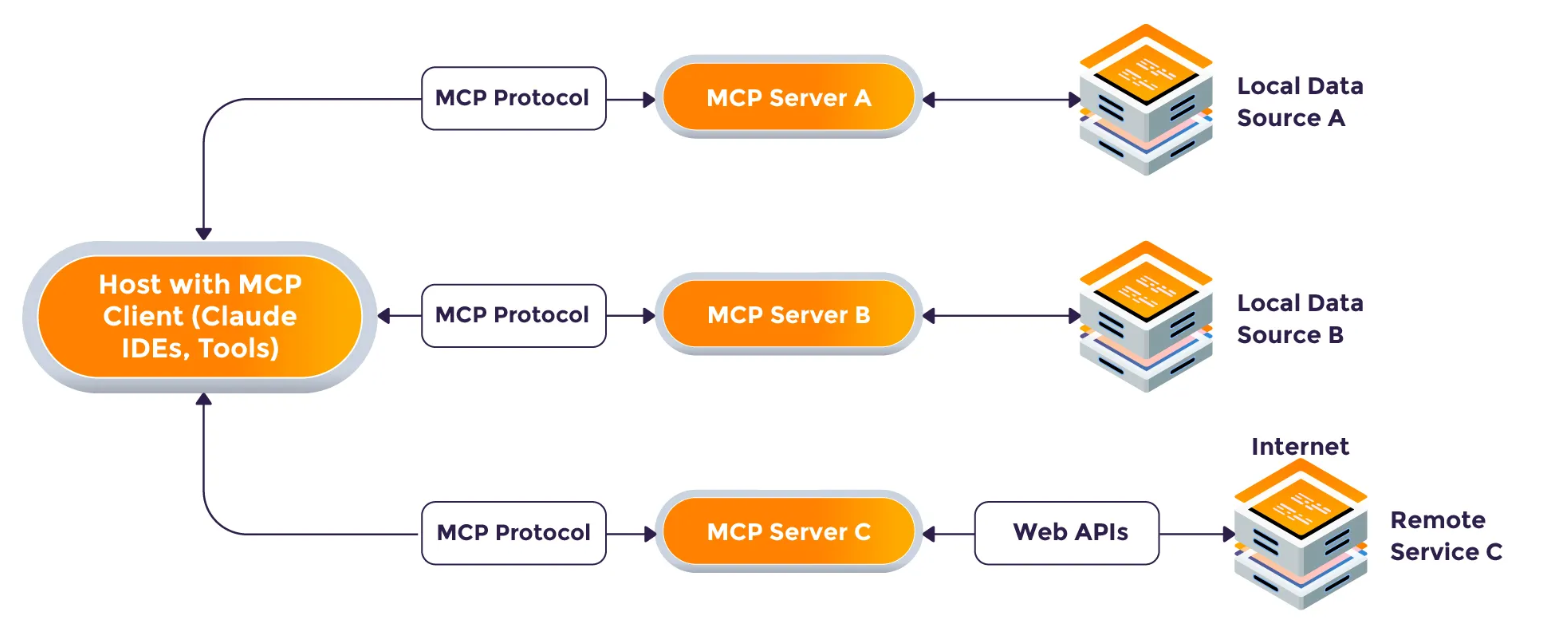

There is a growing ecosystem of AI clients that support MCP, allowing users to leverage their preferred tools while interacting with custom MCP servers. Examples include:

- Claude Desktop: A popular AI assistant that can connect to MCP servers for enhanced capabilities

- GitHub Copilot: Integrated into Visual Studio Code, enabling developers to use MCP tools directly from their IDE

- Goose: An open-source, lightweight AI assistant that can also connect to MCP servers for various tasks

The MCP server acts as a middleware layer, exposing specific tools that AI clients can use to interact with your systems safely and efficiently.
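To make the client/server exchange concrete: MCP messages are JSON-RPC 2.0, and a client invokes a tool by sending a tools/call request that names the tool and passes its arguments. The sketch below only builds such a request with Python's json module; the tool name start_simulator and its env argument are hypothetical placeholders, not necessarily what our server exposes.

```python
import json
from typing import Any, Dict


def build_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request as an MCP client would send it."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and argument, for illustration only.
payload = build_tool_call(1, "start_simulator", {"env": "stage"})
print(payload)
```

The server answers with a JSON-RPC response whose result carries the tool's output, so the AI client never needs to know how the tool is implemented.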

Building Our Diagnosis Simulator MCP Server

Project Structure and Setup

Our MCP server is built using the FastMCP framework, which simplifies the process of creating MCP-compliant servers in Python. Here’s our project structure:

    diagnosis-simulator-mcp/

Dependencies and Configuration

First, I define our project dependencies in pyproject.toml:

    [project]

The MCP Python SDK provides everything we need to build a robust server with minimal boilerplate code.

Core Server Implementation

Let’s break down our server implementation:

1. Server Initialization and Configuration

    import argparse
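As a rough sketch of server initialization, assuming the server reads its settings from command-line flags, argparse could handle them as below. The flags --host, --port, --stage-container and --prod-container and their defaults are hypothetical, chosen only for illustration.

```python
import argparse
from typing import Optional, Sequence


def parse_args(argv: Optional[Sequence[str]] = None) -> argparse.Namespace:
    """Parse server settings; every flag name and default here is hypothetical."""
    parser = argparse.ArgumentParser(description="Diagnosis simulator MCP server")
    parser.add_argument("--host", default="0.0.0.0",
                        help="Interface the HTTP server binds to")
    parser.add_argument("--port", type=int, default=8000,
                        help="Port for the MCP endpoint")
    parser.add_argument("--stage-container", default="simulator-stage",
                        help="Docker container name of the stage simulator")
    parser.add_argument("--prod-container", default="simulator-prod",
                        help="Docker container name of the prod simulator")
    return parser.parse_args(argv)


# Explicit argv list instead of sys.argv, e.g. for testing.
args = parse_args(["--port", "9000", "--stage-container", "sim-stage"])
```

Passing an explicit argument list to parse_args, as in the last line, makes the configuration easy to test without touching sys.argv.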

2. Docker Container Management

We created utility functions to manage Docker containers:

    def restart_container(env: str):
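One possible shape for these helpers, assuming the Docker CLI is available on the EC2 host and that a hypothetical CONTAINERS mapping links each environment to its container name, is to build the docker command as an argument list and run it with subprocess:

```python
import subprocess
from typing import List

# Hypothetical environment-to-container mapping; adjust to your deployment.
CONTAINERS = {"stage": "diagnosis-simulator-stage", "prod": "diagnosis-simulator-prod"}
ALLOWED_ACTIONS = {"start", "stop", "restart"}


def docker_command(action: str, env: str) -> List[str]:
    """Return the docker CLI argument list for an action on an environment's container."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"Unsupported action: {action}")
    if env not in CONTAINERS:
        raise ValueError(f"Unknown environment: {env}")
    return ["docker", action, CONTAINERS[env]]


def run_container_action(action: str, env: str) -> str:
    """Run the docker command; raises CalledProcessError on a non-zero exit code."""
    result = subprocess.run(
        docker_command(action, env), capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def restart_container(env: str) -> str:
    """Restart the simulator container for the given environment."""
    return run_container_action("restart", env)
```

Using an argument list instead of a shell string means the environment name is never interpreted by a shell, and the two allow-lists reject unexpected input before Docker is ever called.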

3. Configuration Management

Our simulator uses complex JSON configuration files. We implemented functions to read, update, and manage these configurations:

    def read_config_json(env: str) -> Any:
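A minimal sketch of such read, write, and reset helpers, assuming each environment's config is a JSON file at a hypothetical path and that an untouched default copy (here simulator.default.json) sits beside it:

```python
import json
import shutil
from pathlib import Path
from typing import Any, Dict

# Hypothetical file locations, for illustration only.
CONFIG_PATHS: Dict[str, Path] = {
    "stage": Path("config/stage/simulator.json"),
    "prod": Path("config/prod/simulator.json"),
}
DEFAULT_SUFFIX = ".default.json"


def read_config_json(env: str) -> Any:
    """Load and return the parsed JSON config for an environment."""
    with CONFIG_PATHS[env].open(encoding="utf-8") as f:
        return json.load(f)


def write_config_json(env: str, data: Any) -> None:
    """Dump to a temporary file first, then replace the real config in one rename."""
    path = CONFIG_PATHS[env]
    tmp = path.with_suffix(".tmp")
    with tmp.open("w", encoding="utf-8") as f:
        json.dump(data, f, indent=2)
    tmp.replace(path)


def reset_config_json(env: str) -> None:
    """Restore the environment's config from its default copy."""
    path = CONFIG_PATHS[env]
    shutil.copyfile(path.with_name(path.stem + DEFAULT_SUFFIX), path)
```

Writing to a temporary file and then replacing the original keeps the simulator from reading a half-written config; on POSIX filesystems the final rename is atomic.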

4. MCP Tools Definition

The heart of our MCP server is its set of tool definitions. Each tool is exposed to AI clients as a callable function.

FastMCP automatically generates the necessary MCP metadata (names, descriptions, and input schemas) for these tools, making them discoverable by AI clients. It also supports custom routes for additional HTTP endpoints, such as a health check. A health check endpoint can be added like this:

    # Health check endpoint, in case needed for monitoring

5. Advanced Configuration Updates

One of the most complex features is the ability to update nested JSON configurations. Our simulator config has a structure like:

    {

Our update_simulator_data tool supports:

- Nested updates: device_info.result.device_info.fwVersion

- List item updates: diagnosis-wifi.result.diagnosis-wifi.SSID

- List replacement: command_list.result.command_list
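As a minimal sketch of how such dotted-path updates could work, under our own assumption that when a path reaches a list, the remaining segments are applied to every dict item in it (list item updates), while a path that ends on a list simply replaces it with the new value (list replacement). The function names and sample config are hypothetical.

```python
import copy
from typing import Any, List


def _apply(node: Any, keys: List[str], value: Any) -> None:
    """Set value at keys inside node; lists fan the remaining keys out to each dict item."""
    if isinstance(node, list):
        # Assumed "list item update": apply the rest of the path to every dict in the list.
        for item in node:
            if isinstance(item, dict):
                _apply(item, keys, value)
        return
    if not isinstance(node, dict):
        raise TypeError(f"Cannot descend into {type(node).__name__} at '{keys[0]}'")
    key, rest = keys[0], keys[1:]
    if key not in node:
        raise KeyError(f"Path segment not found: {key}")
    if rest:
        _apply(node[key], rest, value)
    else:
        # Final segment: plain assignment covers scalars and whole-list replacement.
        node[key] = value


def update_by_path(config: dict, path: str, value: Any) -> dict:
    """Return a deep copy of config with the dotted path set to value."""
    updated = copy.deepcopy(config)
    _apply(updated, path.split("."), value)
    return updated


# Hypothetical config mirroring the three path styles listed above.
config = {
    "device_info": {"result": {"device_info": {"fwVersion": "1.0.0"}}},
    "diagnosis-wifi": {"result": {"diagnosis-wifi": [{"SSID": "lab-ap"}, {"SSID": "guest"}]}},
    "command_list": {"result": {"command_list": ["reboot"]}},
}
updated = update_by_path(config, "device_info.result.device_info.fwVersion", "22.22.22.22.22")
updated = update_by_path(updated, "diagnosis-wifi.result.diagnosis-wifi.SSID", "test-ap")
updated = update_by_path(updated, "command_list.result.command_list", ["reboot", "factory_reset"])
```

Working on a deep copy leaves the original config untouched if any segment is missing, so a bad path fails without a partial write.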

Running the Server

The server can be started with configuration parameters:

    uv run python server.py \

Alternatively, the provided scripts start the server using environment variables:

- run_server.bat for Windows

- run_server.sh for Linux/macOS

Demo: Using the MCP Server

Our MCP server works with multiple AI clients. Here are examples of how it can be used:

Visual Studio Code with GitHub Copilot

We host this MCP server on the same AWS EC2 instance where the simulator containers run. It is reachable over the internet, with appropriate security measures in place, at an address such as http://remote-server-address:8000/mcp.

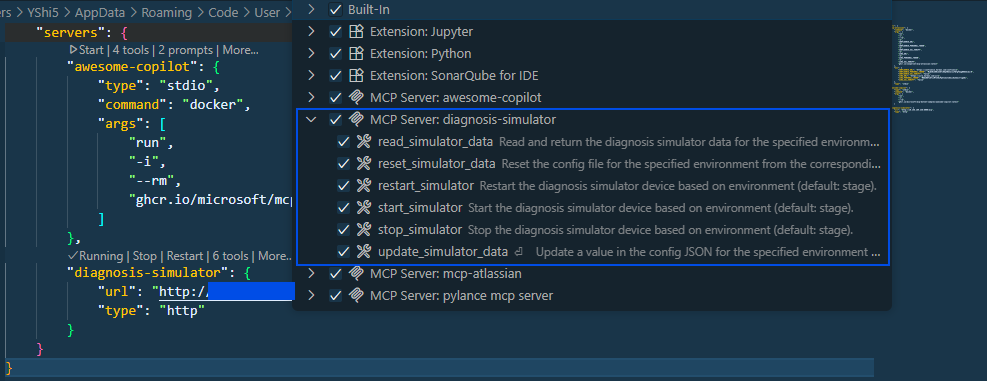

In Visual Studio Code, we can use GitHub Copilot to interact with our MCP server. To configure this remote MCP server in Copilot, add the following to your mcp.json:

    {

Once connected to GitHub Copilot, you can see the available MCP tools and their descriptions.

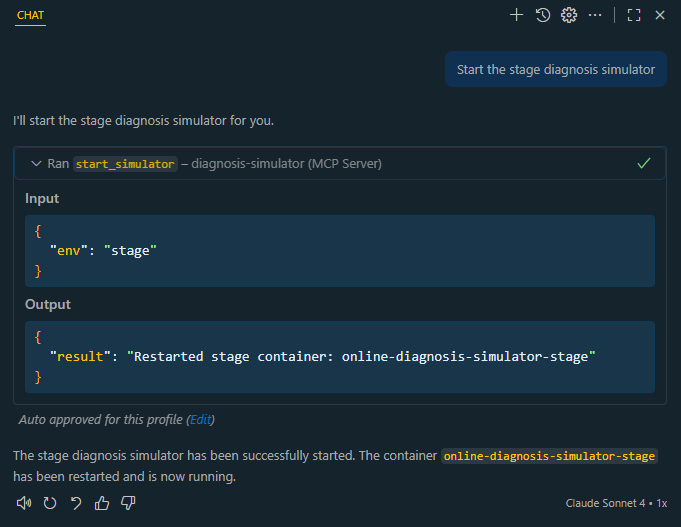

Now you can use natural language to interact with your simulators:

Example interactions:

- “Start the stage diagnosis simulator”

- “Update the firmware version in production environment to 22.22.22.22.22”

- “Change the Network WiFi status in stage environment to ‘Disconnected’”

- “Stop the production simulator”

- “Read the current configuration for stage environment”

- “Reset the prod simulator configuration to default”

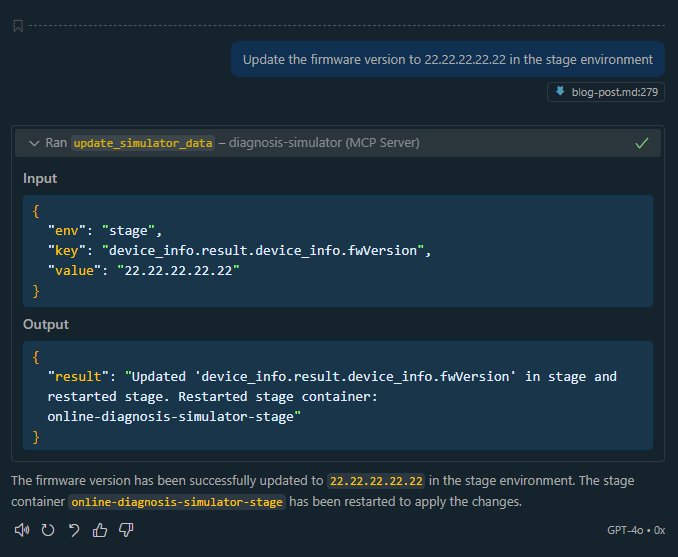

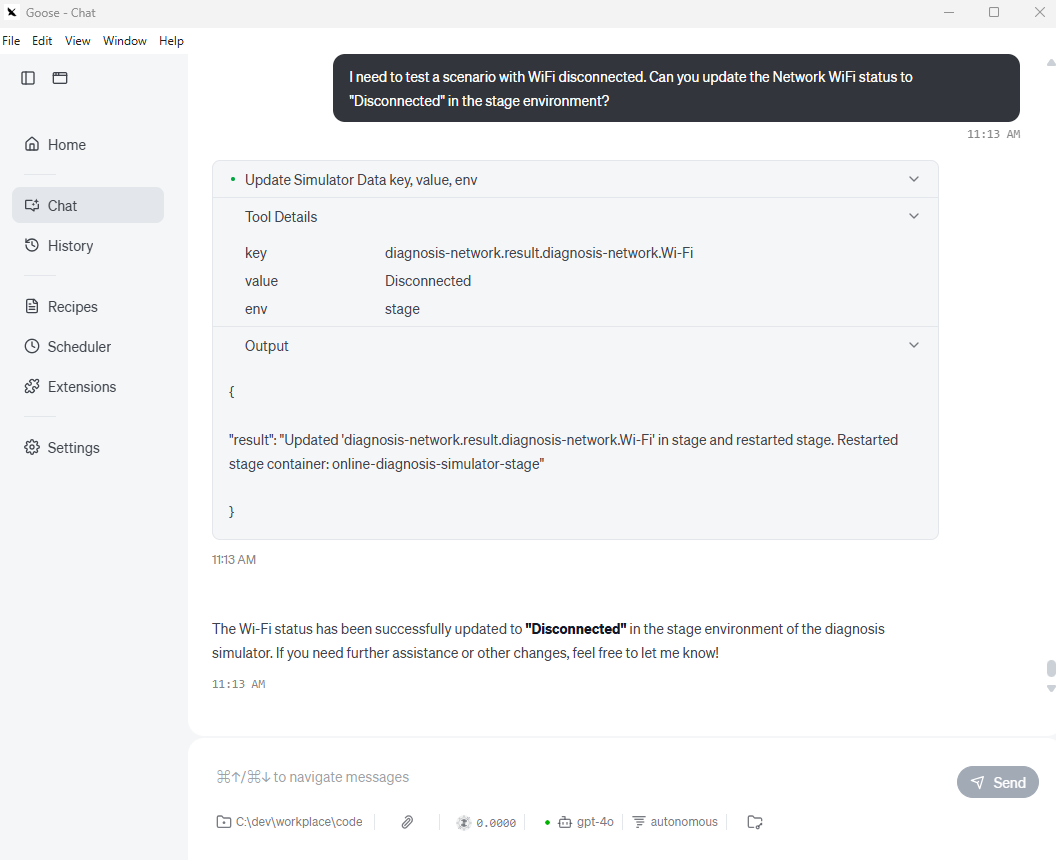

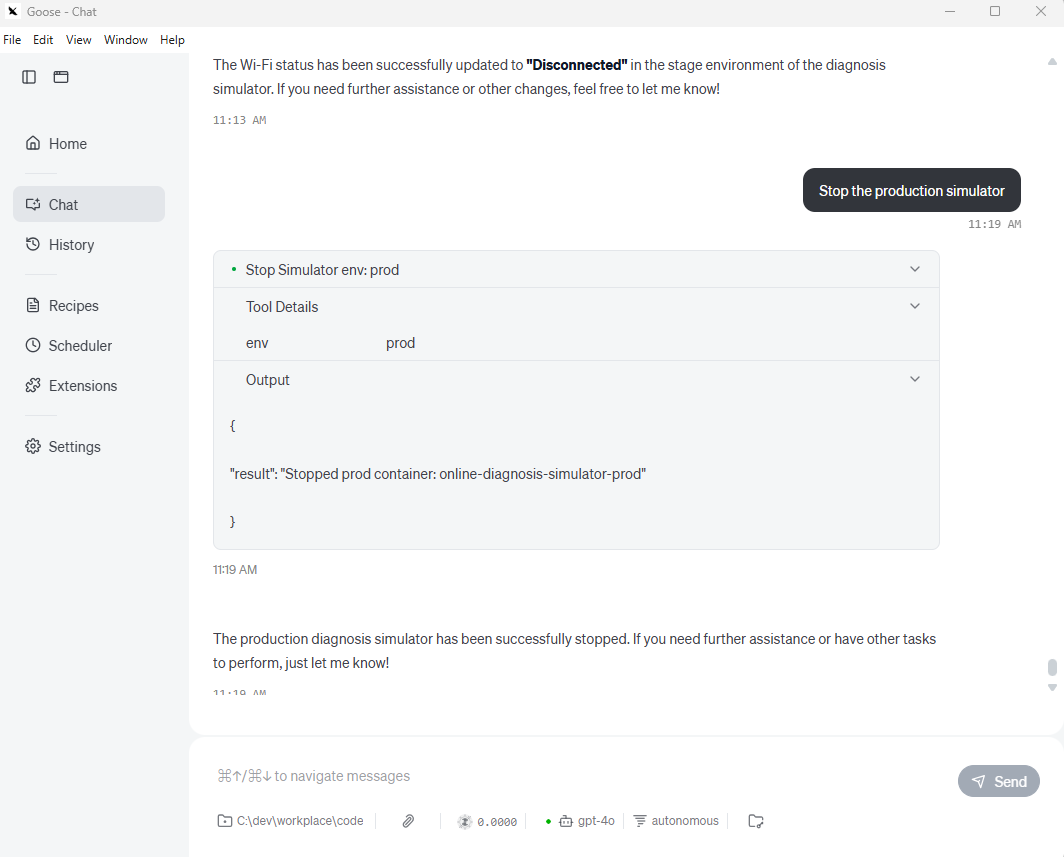

The screenshots below show how the interaction looks in the GitHub Copilot and Goose clients. The AI client translates your natural language requests into precise MCP tool calls, executes them, and returns the results.

GitHub Copilot Screenshots:

Screenshot 1: Start Simulator

    🤖 GitHub Copilot

Screenshot 2: Update Simulator Device Firmware

    🤖 GitHub Copilot

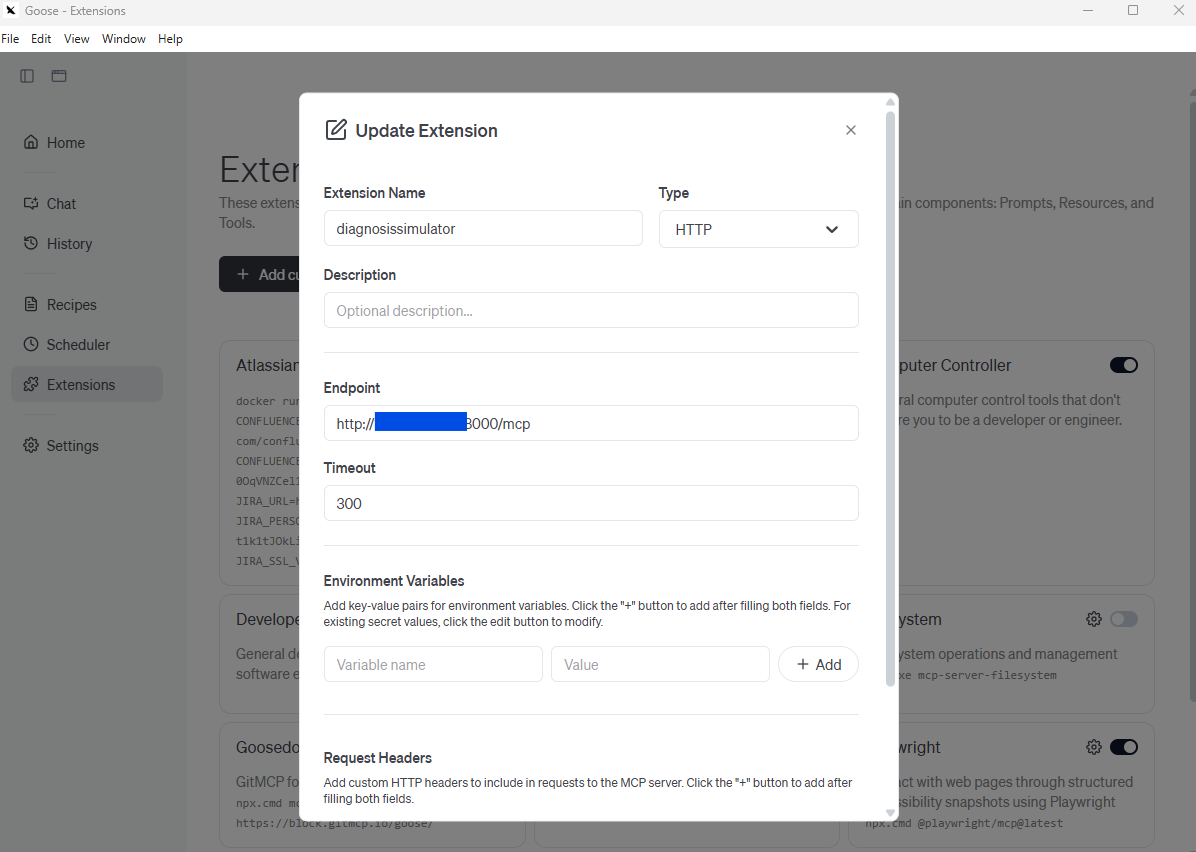

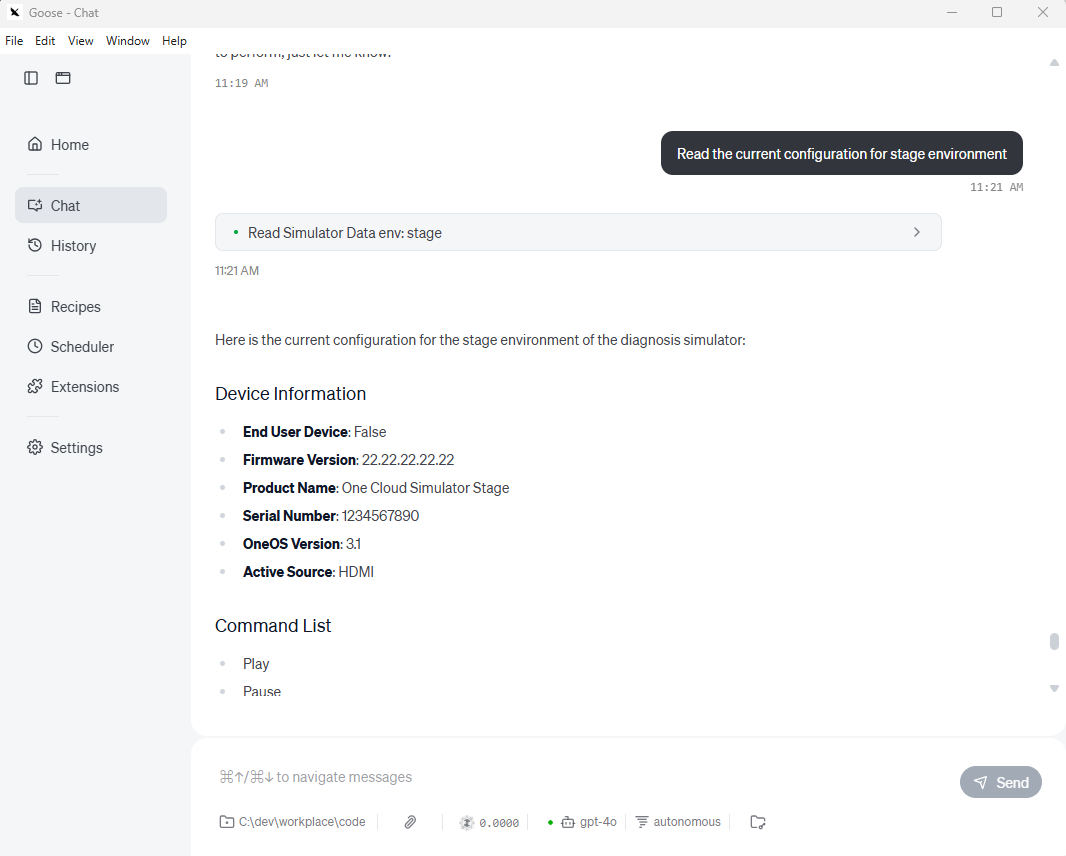

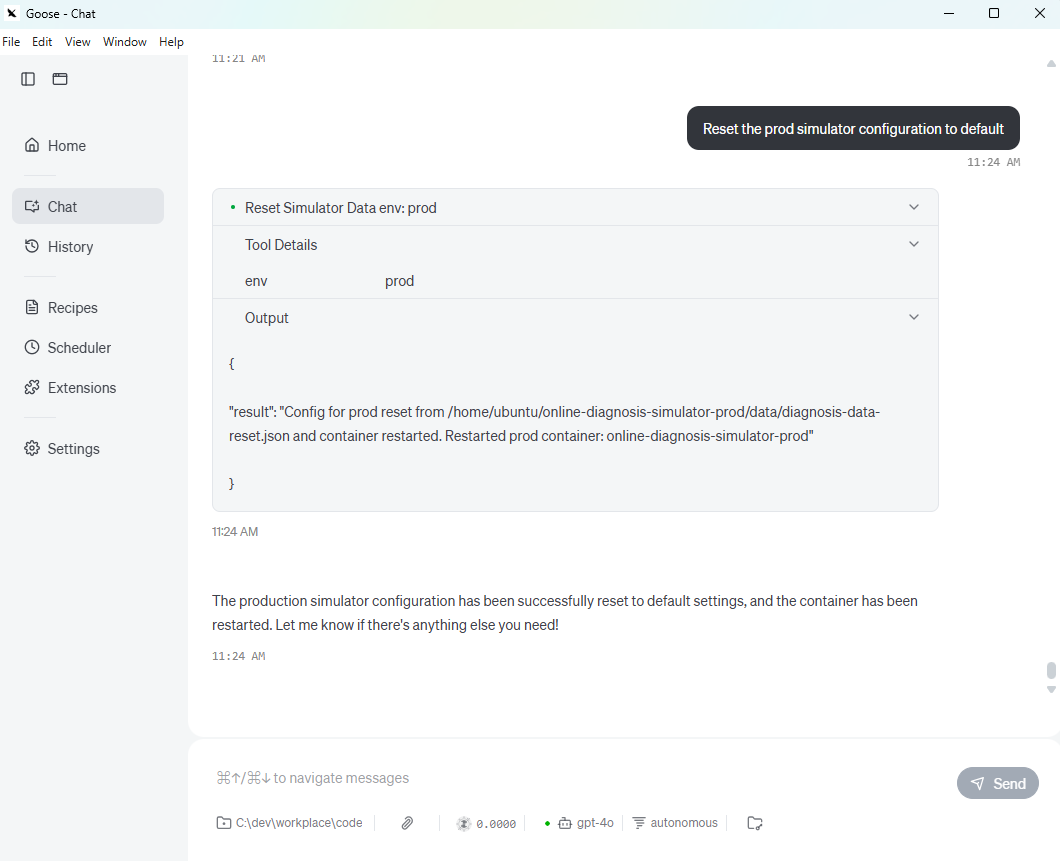

Goose Client Integration

Goose is another open-source AI client that connects to MCP servers and automates engineering tasks. We can create a custom extension in Goose to connect to our diagnosis simulator MCP server.

Goose Screenshots:

Screenshot 1: Update Simulator WiFi Status

    🪿 Goose

Screenshot 2: Stop Simulator

    🪿 Goose

Screenshot 3: Read Simulator Configuration

    🪿 Goose

Screenshot 4: Reset Simulator Configuration

    🪿 Goose

We tested all six MCP server tools with both GitHub Copilot and Goose. The interactions were smooth, and the AI clients successfully translated natural language requests into MCP tool calls, allowing us to manage our diagnosis simulators without needing direct Docker or AWS access.

Key Features Demonstrated:

- Natural Language Interface: Team members can use everyday language instead of Docker commands

- Environment Safety: Clear separation between stage and prod environments

- Automatic Validation: Configuration updates are validated before application

- Immediate Feedback: Real-time status updates and error messages

- No Technical Expertise Required: Anyone can manage simulators through AI chat
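One hypothetical way to implement the automatic validation mentioned above is to check the environment name, confirm the target path already exists, and require the new value to keep the existing JSON type before anything is written. This sketch handles plain nested-dict paths only; the function name and rules are illustrative assumptions.

```python
from typing import Any, List

# Hypothetical set of allowed environments.
VALID_ENVS = {"stage", "prod"}


def validate_update(env: str, config: dict, path: str, value: Any) -> List[str]:
    """Return human-readable problems; an empty list means the update looks safe."""
    errors: List[str] = []
    if env not in VALID_ENVS:
        errors.append(f"Unknown environment '{env}' (expected one of {sorted(VALID_ENVS)})")
    node: Any = config
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            errors.append(f"Path '{path}' does not exist at segment '{key}'")
            return errors
        node = node[key]
    # Require the new value to keep the existing JSON type (e.g. a string stays a string).
    if type(node) is not type(value):
        errors.append(
            f"Type mismatch at '{path}': expected {type(node).__name__}, "
            f"got {type(value).__name__}"
        )
    return errors
```

Returning a list of messages instead of raising on the first problem lets the AI client relay every issue to the user in a single reply.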

Benefits and Impact

Since implementing our MCP server, we’ve seen significant improvements in our development workflow:

Time Savings

- Before: 5-10 minutes per configuration change (SSH + Docker commands + manual file editing)

- After: 30 seconds via natural language AI interaction

- Daily impact: Saves 2-3 hours per developer per day

Team Accessibility

- Before: Only 3 team members could manage simulators

- After: All 12 team members can manage simulators

- Result: Reduced bottlenecks and improved testing velocity

Error Reduction

- Automated validation: Prevents invalid configurations

- Environment safety: Clear separation prevents prod accidents

Developer Experience

- Natural language: No need to remember Docker commands or JSON paths

- Multi-client support: Works with preferred AI tools

- Self-documenting: Tool descriptions provide built-in help

Summary

Building an MCP server for our diagnosis simulator management has transformed how our team approaches testing and development. What started as a solution to eliminate manual Docker container management has evolved into a powerful automation platform that makes complex infrastructure accessible through simple AI conversations.

Key Takeaways:

- MCP Simplifies Integration: The protocol provides a standardized way to connect AI assistants to real-world systems

- FastMCP Reduces Complexity: The Python SDK makes building MCP servers straightforward

- Natural Language APIs: AI clients can translate human intent into precise tool calls

- Team Empowerment: Complex technical operations become accessible to non-technical team members

- Scalable Architecture: Easy to extend with new tools and capabilities

Future Enhancements:

- Monitoring Integration: Add health checks and performance metrics

- Advanced Scheduling: Support for automated test scenarios

- Multi-Environment Support: Extend beyond stage/prod to support feature branches

- Integration Testing: Automated test execution after configuration changes

The combination of MCP’s standardized protocol and AI’s natural language interface creates a powerful paradigm for infrastructure management. By wrapping our technical operations in MCP tools, we’ve made our development infrastructure more accessible, reliable, and efficient.

Whether you’re managing Docker containers, cloud resources, or any other technical systems, consider building an MCP server to bridge the gap between AI assistance and your operational needs. The investment in building these tools pays dividends in team productivity and system reliability.